Motivation

If you’re anything like me, which I suspect you are, over the years you’ve probably amassed a large collection of photos. My own collection holds 73,257 photos across 707 directories, representing 15 years of digital photography, so finding a particular photo is a challenge.

While most photos have some combination of date and time or even GPS information, most people don’t spend their lives memorizing times and dates of photos. We need to augment the “metadata” with something more human.

Wouldn’t it be great if we could search for photos by features that people remember, such as the color of the sunset or the blue of the sky in the picture they’re looking for?

A Three-Part Solution

Our solution needs three parts. First, we need a robust search engine infrastructure. Second, the photos need to be cataloged and added to the search engine’s index. And finally, we need a simple way for users to search through tens of thousands of images by metadata, colors or any other feature we choose to add to the index.

Part 1: Search Infrastructure in a Box

Prerequisites: You will need a working Docker installation.

One of the most flexible search tools available today is Elasticsearch. It provides powerful indexing, query and aggregation functions out of the box. Best of all, you can access all of this via an HTTP API.

In addition to Elasticsearch, we’ll also bring up Kibana, a visualization tool to explore our data.

Loading up with Docker

Instead of setting up these tools individually, let’s use Docker to bring up the official containerized distributions of each component in seconds, with functional defaults. Docker’s new docker-compose command starts and links multiple containers together based on the configuration in a docker-compose.yml file.

The project’s docker-compose.yml file tells Docker to pull down the official images for Elasticsearch and Kibana from Docker Hub, set up a custom configuration file and connect the Kibana container to the Elasticsearch container.

You can clone this repo to get started on the photo search project.

Start Your (search) Engines

From the project directory, simply run docker-compose up and watch Docker create your search engine. (You can use docker-machine on OS X if you need a Docker host.)

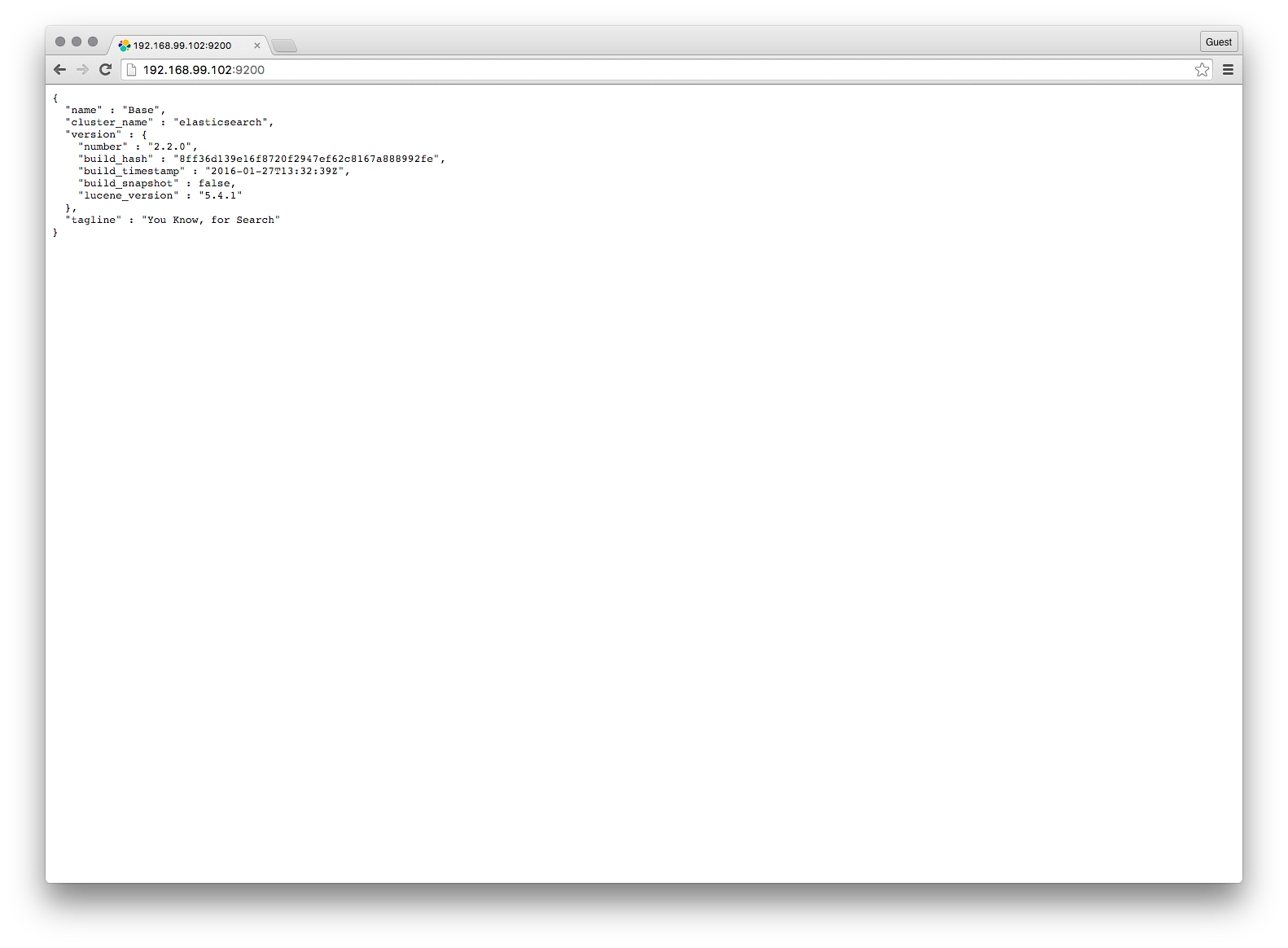

If you go to http://<dockerhost_ip>:9200, you should see a JSON response with the name of the cluster and Elasticsearch version information. This verifies that Elasticsearch is up and running.

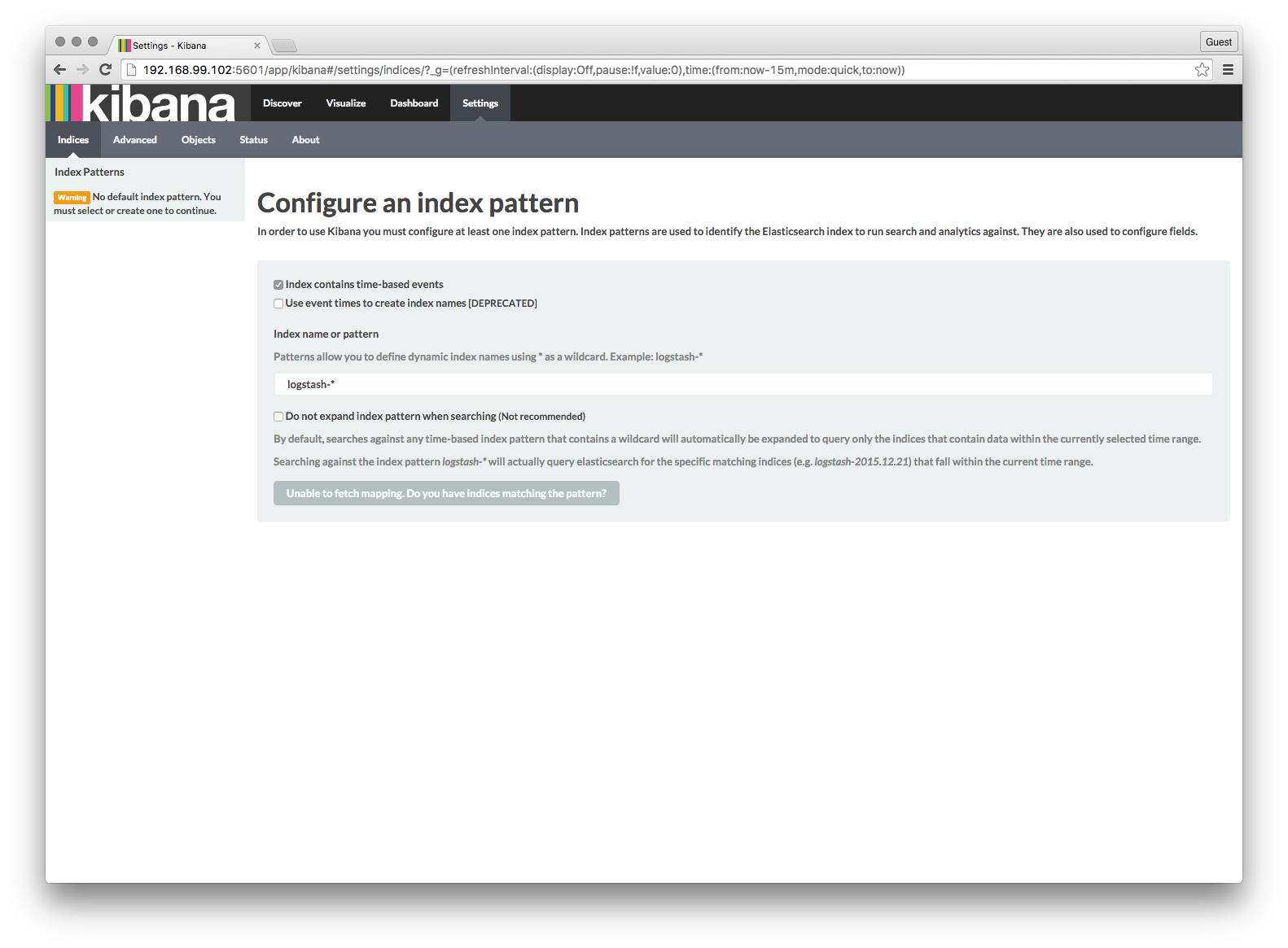
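If you would rather check from a script than from the browser, here is a minimal sketch of the same test in Python, using only the standard library. The function names are my own for illustration, and I am assuming the root endpoint returns the usual `cluster_name` and `version.number` fields; if your version's response looks different, the missing values simply come back as `None`.

```python
import json
import urllib.request


def summarize_root_response(body: str) -> dict:
    """Pick the cluster name and version number out of the root endpoint's JSON."""
    info = json.loads(body)
    return {
        "cluster_name": info.get("cluster_name"),
        # The version details are nested under a "version" object.
        "version": info.get("version", {}).get("number"),
    }


def check_elasticsearch(base_url: str, timeout: float = 5.0) -> dict:
    """GET the root endpoint and return the summarized response.

    Raises urllib.error.URLError if nothing is listening at base_url.
    """
    with urllib.request.urlopen(base_url, timeout=timeout) as response:
        return summarize_root_response(response.read().decode("utf-8"))
```

Calling `check_elasticsearch("http://<dockerhost_ip>:9200")`, with your own Docker host IP substituted in, should return a small dictionary if the container is up.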

Next, go to http://<dockerhost_ip>:5601 to verify that Kibana is running and that it can connect to Elasticsearch.
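The containers can take a little while to start accepting connections, so a script that runs right after docker-compose up may need to wait. Here is a rough sketch of a polling helper in Python; `wait_for_port` is a hypothetical name of mine, and the timeout and interval defaults are arbitrary guesses rather than recommended values.

```python
import socket
import time


def wait_for_port(host: str, port: int, timeout: float = 60.0, interval: float = 1.0) -> bool:
    """Return True once a TCP connection to host:port succeeds, False after timeout."""
    deadline = time.monotonic() + timeout
    while True:
        try:
            # A successful connect means something is listening on the port.
            with socket.create_connection((host, port), timeout=interval):
                return True
        except OSError:
            if time.monotonic() >= deadline:
                return False
            time.sleep(interval)
```

You could call it once for port 9200 and once for port 5601. Keep in mind that an open port only shows that a service is listening; it does not prove that Kibana has connected to Elasticsearch, so the browser check is still worth doing.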

Next Steps

We’ve created a powerful foundation for search that can both handle large amounts of data and be scaled out to service a high volume of requests.

In Part 2, we will extract data from our images and add it to our search index.

PS: You can also check out Part 3 for the AngularJS front end for our search engine.