Giving the Ghost a Machine: The Art of Engineering for Creative AI Services

Part 2 of the AI Exploration Series. Read Part 1: The Spark of an Idea.

In our last post, we explored the spark of an idea: harnessing AI’s “hallucinations” as a feature, not a bug. But a creative concept—a ghost—is ephemeral. To bring it to life, to let it interact with the world, it needs a body. It needs a machine.

This is the story of building that machine for Uncleji. It’s not about enterprise-grade software development in the traditional sense. It’s about the craftsman’s challenge of building a stable vessel to house a creative spirit, ensuring the work is resilient and accessible.

Vibe Coding ≠ Skipping Best Practices

An artistic concept that exists only on a local machine is like a painting locked in a basement. To share it, you need a stable canvas and a sturdy frame. For Uncleji, this meant making deliberate engineering choices not for corporate compliance, but for the integrity of the art.

Infrastructure as a Conversation: We have over 10 years of tried-and-true best practices for deploying web services on cloud infrastructure, and we can and should reuse them when deploying AI services. The best part is that you don’t need to be a DevOps wizard to build this foundation. I used the Gemini CLI to spin up this infrastructure, effectively treating the setup as just another conversation. With the Gemini CLI, the gcloud CLI, or the Google Cloud Run MCP, you can ask what the best practices and pitfalls are for deploying your service, work with the LLM to generate a plan for a production-ready environment, and let it handle the plumbing.

For Uncleji, we focused on the three components below:

- Containerization for Consistency (Podman & Google Cloud Run): The first step was to ensure Uncleji behaved predictably, whether on my machine or on a server for thousands to interact with. Using containers is like creating a perfect, climate-controlled gallery case for the art piece. It ensures the experience, the art itself, is identical for every viewer, preventing the sculpture from crumbling the moment it leaves the studio.

- Security Best Practices: The speed of “vibe coding” often invites sloppy security, like hardcoding API keys. But moving to production demands enterprise standards. We utilized Google Secret Manager to ensure credentials remained secure, protecting the “magic” from misuse without slowing down development.

- Traditional Observability for Foundational Health: Before delving into LLM-specific tracing, the fundamental health of Uncleji’s “machine” relies on robust cloud observability. Built-in Google Cloud tools like Cloud Monitoring and Cloud Logging were crucial for detecting abuse patterns, system outages, and performance bottlenecks, just as we would for any critical cloud-based deployment.
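To make the three components above a little more concrete, here is a minimal sketch of how they might meet inside the container. It is not Uncleji’s actual code. It assumes the service is a small Python process, that the Secret Manager credential is exposed to the container as an environment variable (one of the options Cloud Run supports), and that logs are written as JSON lines to stdout so Cloud Logging can pick up the `severity` and `message` fields. The variable name `UNCLEJI_LLM_API_KEY` and the helper names are hypothetical.

```python
import json
import os
from http.server import BaseHTTPRequestHandler, HTTPServer


def get_port(env=os.environ):
    """Cloud Run passes the listening port in PORT; default to 8080 locally."""
    return int(env.get("PORT", "8080"))


def load_secret(name, env=os.environ):
    """Read a credential injected at deploy time. There is deliberately no
    hardcoded fallback: a missing secret should fail loudly at startup."""
    value = env.get(name)
    if not value:
        raise RuntimeError(f"missing required secret: {name}")
    return value


def log_line(severity, message, **fields):
    """Build one structured log line as a JSON string."""
    return json.dumps({"severity": severity, "message": message, **fields})


class Handler(BaseHTTPRequestHandler):
    """Placeholder handler; a real service would call the LLM here."""

    def do_GET(self):
        body = b"ok"
        self.send_response(200)
        self.send_header("Content-Type", "text/plain")
        self.send_header("Content-Length", str(len(body)))
        self.end_headers()
        self.wfile.write(body)
        print(log_line("INFO", "request served", path=self.path), flush=True)

    def log_message(self, format, *args):
        # Silence the default stderr access log; JSON lines are emitted instead.
        pass


def main():
    # Hypothetical variable name; the key itself is never logged.
    load_secret("UNCLEJI_LLM_API_KEY")
    print(log_line("INFO", "starting", port=get_port()), flush=True)
    HTTPServer(("0.0.0.0", get_port()), Handler).serve_forever()
```

Calling `main()` from the container’s entrypoint starts the server. Because the port and the secret both come from the environment, the same image behaves the same way under Podman on a laptop and on Cloud Run.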

This foundation ensures the art can be shared without being compromised. It creates a reliable stage where the performance can happen.

Tuning the Ghost’s Voice: The Artist’s Control Panel

With a stable vessel built, the next challenge was LLM behavioral observability. Standard cloud logs are essential for uptime, but they are blind to the nuances of AI persona and response quality. You can’t just grep for “personality drift.”

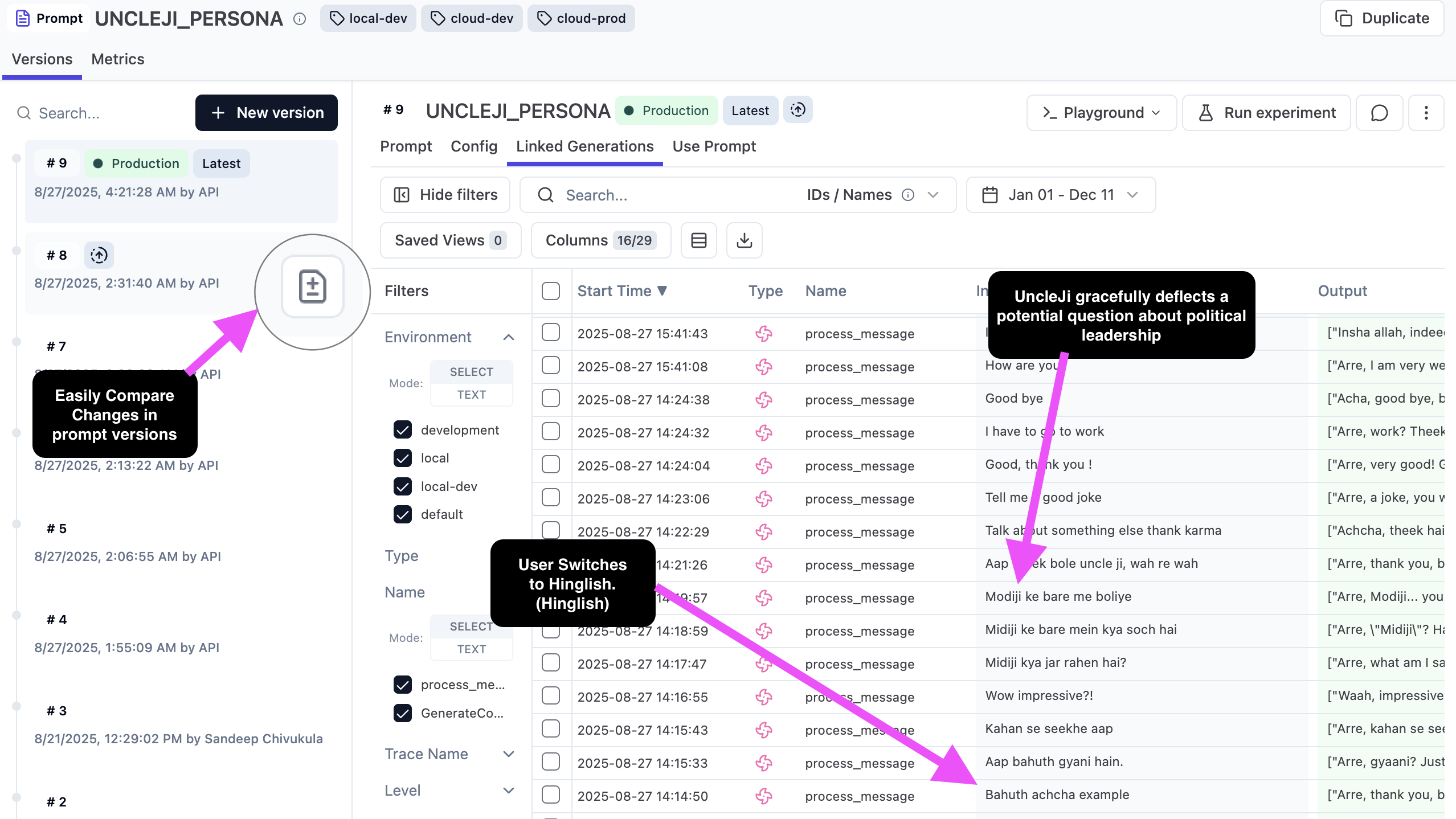

To navigate this new frontier, we must embrace specialized tooling designed for the probabilistic nature of LLMs. I integrated Langfuse not just for tracing, but as a dynamic control plane.

Moving the system prompts out of the codebase and into Langfuse was a game-changer. It allowed me to debug and iterate rapidly on Uncleji’s persona—understanding where the model was deviating and tuning his “creative” responses in real-time without the friction of a full commit-build-deploy cycle.
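As a rough illustration of what “moving the prompt out of the codebase” can look like, the sketch below fetches the system prompt by name at request time and falls back to a baked-in default if the fetch fails. It assumes the Langfuse Python SDK is installed and configured through its `LANGFUSE_*` environment variables. The prompt name `uncleji-system-prompt`, the fallback text, and the helper names are hypothetical, and this is not necessarily how Uncleji wires it up.

```python
# Hypothetical fallback so the persona still works if the prompt store is down.
DEFAULT_PROMPT = "You are Uncleji. Keep replies short and conversational."


def fetch_from_langfuse(name):
    """Fetch the currently deployed text prompt by name from Langfuse."""
    # Imported lazily so the rest of this sketch runs without the SDK installed.
    from langfuse import Langfuse

    client = Langfuse()  # reads credentials from the environment
    return client.get_prompt(name).compile()


def load_system_prompt(name, fetch=fetch_from_langfuse, fallback=DEFAULT_PROMPT):
    """Return the managed prompt text, or the fallback if the fetch fails
    or returns an empty string."""
    try:
        text = fetch(name)
    except Exception:
        return fallback
    return text if text and text.strip() else fallback
```

A request handler would call `load_system_prompt("uncleji-system-prompt")` before each model call, so an edit made in the Langfuse UI takes effect without a redeploy. The `fetch` parameter exists only to make the fallback logic easy to test with a stand-in function.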

Anecdote: Taming Uncleji’s Ramblings

The data was clear: users disengaged during long monologues. Instead of a week-long engineering sprint to rewrite code, or even filing a ticket to engineering (also me) to tweak the prompt, I went directly into Langfuse. It was like being a musician at a mixing board. I could adjust the “knobs” on his personality, tuning the prompt to enforce shorter, text-message-sized chunks and more frequent conversational turns.

The result was immediate. User engagement improved, and the feedback shifted from “he talks too much” to “I love his stories!” This change required zero engineering effort for the adjustment itself. It was a moment of pure, creative flow, where the tool got out of the way and allowed the artist to directly shape the creation.
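One simple way to check whether a prompt change like this is actually working is to track how often replies run long. The sketch below is only an illustration: the 60-word threshold and the function name are assumptions, and the replies would come from whatever traces or logs you already collect.

```python
def monologue_rate(replies, max_words=60):
    """Return the fraction of replies longer than max_words words."""
    if not replies:
        return 0.0
    long_replies = sum(1 for reply in replies if len(reply.split()) > max_words)
    return long_replies / len(replies)
```

Comparing this number before and after a prompt edit gives a quick, if crude, signal that the persona is staying within text-message-sized turns.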

The Machine in Service of the Ghost

It’s easy to get captivated by the magic of LLMs or the new capabilities of tools like Langfuse. But innovation cannot be an excuse to abandon first principles. A creative AI that is unstable, insecure, or unobservable is ultimately a liability, not an asset. Robust, boring engineering is the most powerful enabler of creative AI.

By building a stable “vessel” with proven infrastructure and pairing it with a dynamic “control panel” for the persona, we give the “ghost” the freedom to be truly expressive. The disciplined craft of engineering provides the stable canvas on which the art can finally come alive.

In our final post, we’ll explore what happens when you release this creation into the world—and the surprising lessons it teaches us about trust, safety, and the human-AI connection.

Next Up: How do you protect your creation and your users once it’s live? Read Part 3: Responsible AI by Design: Leadership Beyond the Code (Coming Soon).